History of physics

Physics is the science of matter and its behaviour and motion. It is one of the oldest scientific disciplines, perhaps the oldest through its inclusion of astronomy. The first written work of physics with that title was Aristotle's Physics.

Elements of what became physics were drawn primarily from the fields of astronomy, optics, and mechanics, which were methodologically united through the study of geometry. These disciplines began in Antiquity with the Babylonians and with Hellenistic writers such as Archimedes and Ptolemy, then passed on to the Arabic-speaking world where they were critiqued and developed into a more physical and experimental tradition by scientists such as Ibn al-Haytham and Abū Rayhān Bīrūnī,[1][2] before eventually passing on to Western Europe where they were studied by scholars such as Roger Bacon and Witelo. They were thought of as technical in character, and many philosophers did not perceive their descriptive content as representing a philosophically significant knowledge of the natural world. Similar mathematical traditions also existed in ancient Chinese and Indian sciences.

Meanwhile, philosophy, including what was called “physics”, focused on explanatory (rather than descriptive) schemes developed around the Aristotelian idea of the four types of “causes”. According to Aristotelian and, later, Scholastic physics, things moved in the way that they did because it was part of their essential nature to do so. Celestial objects were thought to move in circles, because perfect circular motion was considered an innate property of objects that existed in the uncorrupted realm of the celestial spheres. The theory of impetus, the ancestor to the concepts of inertia and momentum, also belonged to this philosophical tradition, and was developed by medieval philosophers such as John Philoponus, Avicenna and Jean Buridan. The physical traditions in ancient China and India were also largely philosophical.

In the philosophical tradition of “physics”, motions below the lunar sphere were seen as imperfect, and thus could not be expected to exhibit consistent motion. More idealized motion in the “sublunary” realm could only be achieved through artifice, and prior to the 17th century, many philosophers did not view artificial experiments as a valid means of learning about the natural world. Instead, physical explanations in the sublunary realm revolved around tendencies. Stones contained the element earth, and earthy objects tended to move in a straight line toward the center of the universe (around which the earth was supposed to be situated) unless otherwise prevented from doing so. Other physical explanations, which would not later be considered within the bounds of physics, followed similar reasoning. For instance, people tended to think because people were, by their essential nature, thinking animals.

- Further information: History of astronomy and Aristotelian physics

Emergence of experimental method and physical optics

The use of experiments in the sense of empirical procedures[3] in geometrical optics dates back to second century Roman Egypt, where Ptolemy carried out several early such experiments on reflection, refraction and binocular vision.[4] Due to his Platonic methodological paradigm of "saving the appearances", however, he discarded or rationalized any empirical data that did not support his theories,[5] as the idea of experiment did not hold any importance in Antiquity.[6] The incorrect emission theory of vision thus continued to dominate optics through to the 10th century.

The turn of the second millennium saw the emergence of experimental physics with the development of an experimental method emphasizing the role of experimentation as a form of proof in scientific inquiry, and the development of physical optics where the mathematical discipline of geometrical optics was successfully unified with the philosophical field of physics. The Iraqi physicist Ibn al-Haytham (Alhazen) is considered a central figure in this shift in physics from a philosophical activity to an experimental and mathematical one, and the shift in optics from a mathematical discipline to a physical and experimental one.[7][8][9][10][11][12] Due to his positivist approach,[13] his Doubts Concerning Ptolemy insisted on scientific demonstration and criticized Ptolemy's confirmation bias and conjectural undemonstrated theories.[14] His Book of Optics (1021) was the earliest successful attempt at unifying a mathematical discipline (geometrical optics) with the philosophical field of physics, to create the modern science of physical optics. An important part of this was the intromission theory of vision; to prove it, he developed an experimental method for testing his hypothesis.[7][8][9][10][12][15] He conducted various experiments to prove his intromission theory[16] and other hypotheses on light and vision.[17] The Book of Optics established experimentation as the norm of proof in optics,[15] and gave optics a physico-mathematical conception at a much earlier date than the other mathematical disciplines.[18] His On the Light of the Moon also attempted to combine mathematical astronomy with physics, a field now known as astrophysics, to formulate several astronomical hypotheses which he proved through experimentation.[9]

Galileo Galilei and the rise of physico-mathematics

In the 17th century, natural philosophers began to mount a sustained attack on the Scholastic philosophical program, and supposed that mathematical descriptive schemes adopted from such fields as mechanics and astronomy could actually yield universally valid characterizations of motion. The Tuscan mathematician Galileo Galilei was the central figure in the shift to this perspective. As a mathematician, Galileo’s role in the university culture of his era was subordinated to the three major topics of study: law, medicine, and theology (which was closely allied to philosophy). Galileo, however, felt that the descriptive content of the technical disciplines warranted philosophical interest, particularly because mathematical analysis of astronomical observations—notably the radical analysis offered by astronomer Nicolaus Copernicus concerning the relative motions of the sun, earth, moon, and planets—indicated that philosophers’ statements about the nature of the universe could be shown to be in error. Galileo also performed mechanical experiments, and insisted that motion itself—regardless of whether that motion was natural or artificial—had universally consistent characteristics that could be described mathematically.

Galileo used his 1609 telescopic discovery of the moons of Jupiter, as published in his Sidereus Nuncius in 1610, to procure a position in the Medici court with the dual title of mathematician and philosopher. As a court philosopher, he was expected to engage in debates with philosophers in the Aristotelian tradition, and received a large audience for his own publications, such as The Assayer and Discourses and Mathematical Demonstrations Concerning Two New Sciences, which was published abroad after he was placed under house arrest for his publication of Dialogue Concerning the Two Chief World Systems in 1632.[19][20]

Galileo’s interest in mechanical experimentation and the mathematical description of motion established a new natural philosophical tradition focused on experimentation. This tradition, combining with the non-mathematical emphasis on the collection of "experimental histories" by philosophical reformists such as William Gilbert and Francis Bacon, drew a significant following in the years leading up to and following Galileo’s death, including Evangelista Torricelli and the participants in the Accademia del Cimento in Italy; Marin Mersenne and Blaise Pascal in France; Christiaan Huygens in the Netherlands; and Robert Hooke and Robert Boyle in England.

The Cartesian philosophy of motion

The French philosopher René Descartes was well-connected to, and influential within, the experimental philosophy networks. Descartes had a more ambitious agenda, however, which was geared toward replacing the Scholastic philosophical tradition altogether. Questioning the reality interpreted through the senses, Descartes sought to reestablish philosophical explanatory schemes by reducing all perceived phenomena to being attributable to the motion of an invisible sea of “corpuscles”. (Notably, he reserved human thought and God from his scheme, holding these to be separate from the physical universe). In proposing this philosophical framework, Descartes supposed that different kinds of motion, such as that of planets versus that of terrestrial objects, were not fundamentally different, but were merely different manifestations of an endless chain of corpuscular motions obeying universal principles. Particularly influential were his explanation for circular astronomical motions in terms of the vortex motion of corpuscles in space (Descartes argued, in accord with the beliefs, if not the methods, of the Scholastics, that a vacuum could not exist), and his explanation of gravity in terms of corpuscles pushing objects downward.[21][22][23]

- Further information: Mechanical explanations of gravitation

Descartes, like Galileo, was convinced of the importance of mathematical explanation, and he and his followers were key figures in the development of mathematics and geometry in the 17th century. Cartesian mathematical descriptions of motion held that all mathematical formulations had to be justifiable in terms of direct physical action, a position held by Huygens and the German philosopher Gottfried Leibniz, who, while following in the Cartesian tradition, developed his own philosophical alternative to Scholasticism, which he outlined in his 1714 work, The Monadology.

Newtonian motion versus Cartesian motion

In the late 17th and early 18th centuries, the Cartesian mechanical tradition was challenged by another philosophical tradition established by the Cambridge University mathematician Isaac Newton. Where Descartes held that all motions should be explained with respect to the immediate force exerted by corpuscles, Newton chose to describe universal motion with reference to a set of fundamental mathematical principles: his three laws of motion and the law of gravitation, which he introduced in his 1687 work Mathematical Principles of Natural Philosophy. Using these principles, Newton removed the idea that objects followed paths determined by natural shapes (such as Kepler’s idea that planets moved naturally in ellipses), and instead demonstrated that not only regularly observed paths, but all the future motions of any body could be deduced mathematically based on knowledge of their existing motion, their mass, and the forces acting upon them. However, observed celestial motions did not precisely conform to a Newtonian treatment, and Newton, who was also deeply interested in theology, imagined that God intervened to ensure the continued stability of the solar system.

Newton’s principles (but not his mathematical treatments) proved controversial with Continental philosophers, who found his lack of metaphysical explanation for movement and gravitation philosophically unacceptable. Beginning around 1700, a bitter rift opened between the Continental and British philosophical traditions, which was stoked by heated, ongoing, and viciously personal disputes between the followers of Newton and Leibniz concerning priority over the analytical techniques of calculus, which each had developed independently. Initially, the Cartesian and Leibnizian traditions prevailed on the Continent (leading to the dominance of the Leibnizian calculus notation everywhere except Britain). Newton himself remained privately disturbed at the lack of a philosophical understanding of gravitation, while insisting in his writings that none was necessary to infer its reality. As the 18th century progressed, Continental natural philosophers increasingly accepted the Newtonians’ willingness to forgo ontological metaphysical explanations for mathematically described motions.[24][25][26]

Rational mechanics in the 18th century

The mathematical analytical traditions established by Newton and Leibniz flourished during the 18th century as more mathematicians learned calculus and elaborated upon its initial formulation. The application of mathematical analysis to problems of motion was known as rational mechanics, or mixed mathematics (and was later termed classical mechanics). This work primarily revolved around celestial mechanics, although other applications were also developed, such as the Swiss mathematician Daniel Bernoulli’s treatment of fluid dynamics, which he introduced in his 1738 work Hydrodynamica.[27]

Rational mechanics dealt primarily with the development of elaborate mathematical treatments of observed motions, using Newtonian principles as a basis, and emphasized improving the tractability of complex calculations and developing legitimate means of analytical approximation. By the end of the century analytical treatments were rigorous enough to verify the stability of the solar system solely on the basis of Newton’s laws without reference to divine intervention, even as deterministic treatments of systems as simple as the three body problem in gravitation remained intractable.[28]

British work, carried on by mathematicians such as Brook Taylor and Colin Maclaurin, fell behind Continental developments as the century progressed. Meanwhile, work flourished at scientific academies on the Continent, led by such mathematicians as Daniel Bernoulli, Leonhard Euler, Joseph-Louis Lagrange, Pierre-Simon Laplace, and Adrien-Marie Legendre. At the end of the century, the members of the French Academy of Sciences had attained clear dominance in the field.[29][30][31][32]

Physical experimentation in the 18th and early 19th centuries

At the same time, the experimental tradition established by Galileo and his followers persisted. The Royal Society and the French Academy of Sciences were major centers for the performance and reporting of experimental work, and Newton was himself an influential experimenter, particularly in the field of optics, where he was recognized for his prism experiments dividing white light into its constituent spectrum of colors, as published in his 1704 book Opticks (which also advocated a particulate interpretation of light). Experiments in mechanics, optics, magnetism, static electricity, chemistry, and physiology were not clearly distinguished from each other during the 18th century, but significant differences in explanatory schemes and, thus, experiment design were emerging. Chemical experimenters, for instance, defied attempts to enforce a scheme of abstract Newtonian forces onto chemical affiliations, and instead focused on the isolation and classification of chemical substances and reactions.[33]

Nevertheless, the separate fields remained tied together, most clearly through the theories of weightless “imponderable fluids”, such as heat (“caloric”), electricity, and phlogiston (which was rapidly overthrown as a concept following Lavoisier’s identification of oxygen gas late in the century). Assuming that these concepts were real fluids, their flow could be traced through a mechanical apparatus or chemical reactions. This tradition of experimentation led to the development of new kinds of experimental apparatus, such as the Leyden Jar and the Voltaic Pile; and new kinds of measuring instruments, such as the calorimeter, and improved versions of old ones, such as the thermometer. Experiments also produced new concepts, such as the University of Glasgow experimenter Joseph Black’s notion of latent heat and Philadelphia intellectual Benjamin Franklin’s characterization of electrical fluid as flowing between places of excess and deficit (a concept later reinterpreted in terms of positive and negative charges).

While it was recognized early in the 18th century that finding absolute theories of electrostatic and magnetic force akin to Newton’s principles of motion would be an important achievement, none were forthcoming. This impossibility only slowly disappeared as experimental practice became more widespread and more refined in the early years of the 19th century in places such as the newly-established Royal Institution in London, where John Dalton argued for an atomistic interpretation of chemistry, Thomas Young argued for the interpretation of light as a wave, and Michael Faraday established the phenomenon of electromagnetic induction. Meanwhile, the analytical methods of rational mechanics began to be applied to experimental phenomena, most influentially with the French mathematician Joseph Fourier’s analytical treatment of the flow of heat, as published in 1822.[34][35][36]

Thermodynamics, statistical mechanics, and electromagnetic theory

The establishment of a mathematical physics of energy between the 1850s and the 1870s expanded substantially on the physics of prior eras and challenged traditional ideas about how the physical world worked. While Pierre-Simon Laplace’s work on celestial mechanics solidified a deterministically mechanistic view of objects obeying fundamental and totally reversible laws, the study of energy, and particularly the flow of heat, threw this view of the universe into question. Drawing upon the engineering theory of Lazare and Sadi Carnot and Émile Clapeyron; the experimentation of James Prescott Joule on the interchangeability of mechanical, chemical, thermal, and electrical forms of work; and his own Cambridge mathematical tripos training in mathematical analysis, the Glasgow physicist William Thomson and his circle of associates established a new mathematical physics relating to the exchange of different forms of energy and energy’s overall conservation (what is still accepted as the “first law of thermodynamics”). Their work was soon allied with similar but less well-known work by the German physician Julius Robert von Mayer and the physicist and physiologist Hermann von Helmholtz on the conservation of forces.

Taking his mathematical cues from the heat flow work of Joseph Fourier (and his own religious and geological convictions), Thomson believed that the dissipation of energy with time (what is accepted as the “second law of thermodynamics”) represented a fundamental principle of physics, which was expounded in Thomson and Peter Guthrie Tait’s influential work Treatise on Natural Philosophy. However, other interpretations of what Thomson called thermodynamics were established through the work of the German physicist Rudolf Clausius. His statistical mechanics, which was elaborated upon by Ludwig Boltzmann and the British physicist James Clerk Maxwell, held that energy (including heat) was a measure of the speed of particles. Interrelating the statistical likelihood of certain states of organization of these particles with the energy of those states, Clausius reinterpreted the dissipation of energy to be the statistical tendency of molecular configurations to pass toward increasingly likely, increasingly disorganized states (coining the term “entropy” to describe the disorganization of a state). The statistical versus absolute interpretations of the second law of thermodynamics set up a dispute that would last for several decades (producing arguments such as “Maxwell's demon”), and that would not be held to be definitively resolved until the behavior of atoms was firmly established in the early 20th century.[37][38]

- Further information: History of thermodynamics

Meanwhile, the new physics of energy transformed the analysis of electromagnetic phenomena, particularly through the introduction of the concept of the field and the publication of Maxwell’s 1873 Treatise on Electricity and Magnetism, which also drew upon theoretical work by German theoreticians such as Carl Friedrich Gauss and Wilhelm Weber. The encapsulation of heat in particulate motion, and the addition of electromagnetic forces to Newtonian dynamics established an enormously robust theoretical underpinning to physical observations. The prediction that light represented a transmission of energy in wave form through a “luminiferous ether”, and the seeming confirmation of that prediction with Helmholtz student Heinrich Hertz’s 1888 detection of electromagnetic radiation, was a major triumph for physical theory and raised the possibility that even more fundamental theories based on the field could soon be developed.[39][40][41][42] Research on the transmission of electromagnetic waves began soon after, with the experiments conducted by physicists such as Nikola Tesla, Jagadish Chandra Bose and Guglielmo Marconi during the 1890s leading to the invention of radio.

The emergence of a new physics circa 1900

The triumph of Maxwell’s theories was undermined by inadequacies that had already begun to appear. The Michelson-Morley experiment failed to detect a shift in the speed of light, which would have been expected as the earth moved at different angles with respect to the ether. The possibility explored by Hendrik Lorentz, that the ether could compress matter, thereby rendering it undetectable, presented problems of its own as a compressed electron (detected in 1897 by British experimentalist J. J. Thomson) would prove unstable. Meanwhile, other experimenters began to detect unexpected forms of radiation: Wilhelm Röntgen caused a sensation with his discovery of x-rays in 1895; in 1896 Henri Becquerel discovered that certain kinds of matter emit radiation of their own accord. Marie and Pierre Curie coined the term “radioactivity” to describe this property of matter, and isolated the radioactive elements radium and polonium. Ernest Rutherford and Frederick Soddy identified two of Becquerel’s forms of radiation with electrons and the element helium. In 1911 Rutherford established that the bulk of the mass in atoms is concentrated in positively-charged nuclei with orbiting electrons, which was a theoretically unstable configuration. Studies of radiation and radioactive decay continued to be a preeminent focus for physical and chemical research through the 1930s, when the discovery of nuclear fission opened the way to the practical exploitation of what came to be called “atomic” energy.

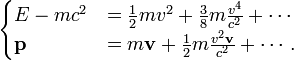

Radical new physical theories also began to emerge in this same period. In 1905 Albert Einstein, then a Bern patent clerk, argued that the speed of light was a constant in all inertial reference frames and that electromagnetic laws should remain valid independent of reference frame. These assertions rendered the ether “superfluous” to physical theory and implied that observations of time and length varied relative to how the observer was moving with respect to the object being measured (what came to be called the “special theory of relativity”). It also followed that mass and energy were interchangeable quantities according to the equation E = mc². In another paper published the same year, Einstein asserted that electromagnetic radiation was transmitted in discrete quantities (“quanta”), according to a constant that the theoretical physicist Max Planck had posited in 1900 to arrive at an accurate theory for the distribution of blackbody radiation, an assumption that explained the strange properties of the photoelectric effect. The Danish physicist Niels Bohr used this same constant in 1913 to explain the stability of Rutherford’s atom as well as the frequencies of light emitted by hydrogen gas.

- Further information: History of special relativity

The radical years: general relativity and quantum mechanics

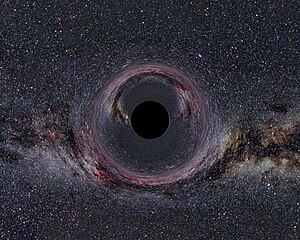

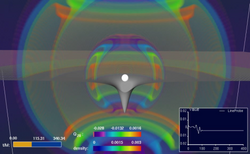

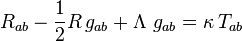

The gradual acceptance of Einstein’s theories of relativity and the quantized nature of light transmission, and of Niels Bohr’s model of the atom, created as many problems as it solved, leading to a full-scale effort to reestablish physics on new fundamental principles. Expanding relativity to cases of accelerating reference frames (the “general theory of relativity”) in the 1910s, Einstein posited an equivalence between the inertial force of acceleration and the force of gravity, leading to the conclusion that space is curved (and, in Einstein’s own early cosmological model, finite in size), and the prediction of such phenomena as gravitational lensing and the distortion of time in gravitational fields.

- Further information: History of general relativity

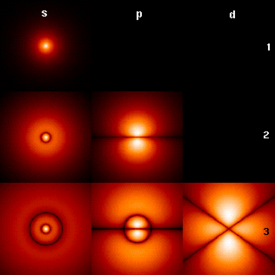

The quantized theory of the atom gave way to a full-scale quantum mechanics in the 1920s. The quantum theory (which previously relied on the “correspondence” at large scales between the quantized world of the atom and the continuities of the “classical” world) was accepted when the Compton Effect established that light carries momentum and can scatter off particles, and when Louis de Broglie asserted that matter can be seen as behaving as a wave in much the same way as electromagnetic waves behave like particles (wave-particle duality). New principles of a “quantum” rather than a “classical” mechanics, formulated in matrix form by Werner Heisenberg, Max Born, and Pascual Jordan in 1925, were based on the probabilistic relationship between discrete “states” and denied the possibility of causality. Erwin Schrödinger established an equivalent theory based on waves in 1926; but Heisenberg’s 1927 “uncertainty principle” (indicating the impossibility of precisely and simultaneously measuring position and momentum) and the “Copenhagen interpretation” of quantum mechanics (named after Bohr’s home city) continued to deny the possibility of fundamental causality, though opponents such as Einstein would assert that “God does not play dice with the universe”.[43] Also in the 1920s, Satyendra Nath Bose's work on photons and quantum mechanics provided the foundation for Bose-Einstein statistics, the theory of the Bose-Einstein condensate, and the discovery of the boson.

- Further information: History of quantum mechanics

Constructing a new fundamental physics

As the philosophically inclined continued to debate the fundamental nature of the universe, quantum theories continued to be produced, beginning with Paul Dirac’s formulation of a relativistic quantum theory in 1927. However, attempts to quantize electromagnetic theory entirely were stymied throughout the 1930s by theoretical formulations yielding infinite energies. This situation was not considered adequately resolved until after World War II ended, when Julian Schwinger, Richard Feynman, and Sin-Itiro Tomonaga independently posited the technique of “renormalization”, which allowed for an establishment of a robust quantum electrodynamics (Q.E.D.).[44]

Meanwhile, new theories of fundamental particles proliferated with the rise of the idea of the quantization of fields through “exchange forces” regulated by an exchange of short-lived “virtual” particles, which were allowed to exist according to the laws governing the uncertainties inherent in the quantum world. Notably, Hideki Yukawa proposed that the positive charges of the nucleus were kept together courtesy of a powerful but short-range force mediated by a particle intermediate in mass between an electron and a proton. This particle, called the “pion”, was identified in 1947, but it was part of a slew of particle discoveries beginning with the neutron, the “positron” (a positively-charged “antimatter” version of the electron), and the “muon” (a heavier relative of the electron) in the 1930s, and continuing after the war with a wide variety of other particles detected in various kinds of apparatus: cloud chambers, nuclear emulsions, bubble chambers, and coincidence counters. At first these particles were found primarily by the ionized trails left by cosmic rays, but were increasingly produced in newer and more powerful particle accelerators.[45]

The interaction of these particles by “scattering” and “decay” provided a key to new fundamental quantum theories. Murray Gell-Mann and Yuval Ne'eman brought some order to these new particles by classifying them according to certain qualities, beginning with what Gell-Mann referred to as the “Eightfold Way”, but proceeding into several different “octets” and “decuplets” which could predict new particles, most famously the Ω−, which was detected at Brookhaven National Laboratory in 1964, and which gave rise to the “quark” model of hadron composition. While the quark model at first seemed inadequate to describe strong nuclear forces, allowing the temporary rise of competing theories such as the S-Matrix, the establishment of quantum chromodynamics in the 1970s finalized a set of fundamental and exchange particles, which allowed for the establishment of a “standard model” based on the mathematics of gauge invariance, which successfully described all forces except for gravity, and which remains generally accepted within the domain to which it is designed to be applied.[46]

The “standard model” groups the electroweak interaction theory and quantum chromodynamics into a structure denoted by the gauge group SU(3)×SU(2)×U(1). The formulation of the unification of the electromagnetic and weak interactions in the standard model is due to Abdus Salam, Steven Weinberg and, subsequently, Sheldon Glashow. After the discovery, made at CERN, of the existence of neutral weak currents,[47][48][49][50] mediated by the Z boson foreseen in the standard model, the physicists Salam, Glashow and Weinberg received the 1979 Nobel Prize in Physics for their electroweak theory.[51]

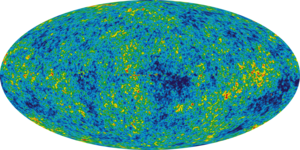

While accelerators have confirmed most aspects of the standard model by detecting expected particle interactions at various collision energies, no theory reconciling the general theory of relativity with the standard model has yet been found, although “string theory” has provided one promising avenue forward. Since the 1970s, fundamental particle physics has provided insights into early universe cosmology, particularly the “big bang” theory proposed as a consequence of Einstein’s general theory. However, starting from the 1990s, astronomical observations have also provided new challenges, such as the need for new explanations of galactic stability (the problem of dark matter), and of the accelerating expansion of the universe (the problem of dark energy).

The physical sciences

With increased accessibility to and elaboration upon advanced analytical techniques in the 19th century, physics was defined as much, if not more, by those techniques than by the search for universal principles of motion and energy, and the fundamental nature of matter. Fields such as acoustics, geophysics, astrophysics, aerodynamics, plasma physics, low-temperature physics, and solid-state physics joined optics, fluid dynamics, electromagnetism, and mechanics as areas of physical research. In the 20th century, physics also became closely allied with such fields as electrical, aerospace, and materials engineering, and physicists began to work in government and industrial laboratories as much as in academic settings. Following World War II, the population of physicists increased dramatically, and came to be centered on the United States, while, in more recent decades, physics has become a more international pursuit than at any time in its previous history.

Classical mechanics

Classical mechanics is used for describing the motion of macroscopic objects, from projectiles to parts of machinery, as well as astronomical objects, such as spacecraft, planets, stars, and galaxies. It produces very accurate results within these domains, and is one of the oldest and largest subjects in science, engineering and technology.

Besides this, many related specialties exist, dealing with gases, liquids, and solids, and so on. Classical mechanics is enhanced by special relativity for objects moving with high velocity, approaching the speed of light; general relativity is employed to handle gravitation at a deeper level; and quantum mechanics handles the wave-particle duality of atoms and molecules.

In physics, classical mechanics is one of the two major sub-fields of study in the science of mechanics, which is concerned with the set of physical laws governing and mathematically describing the motions of bodies and aggregates of bodies. The other sub-field is quantum mechanics.

The term classical mechanics was coined in the early 20th century to describe the system of mathematical physics begun by Isaac Newton and many contemporary 17th century workers, building upon the earlier astronomical theories of Johannes Kepler, which in turn were based on the precise observations of Tycho Brahe and the studies of terrestrial projectile motion of Galileo, but before the development of quantum physics and relativity. Therefore, some sources exclude so-called "relativistic physics" from that category. However, a number of modern sources do include Einstein's mechanics, which in their view represents classical mechanics in its most developed and most accurate form. The initial stage in the development of classical mechanics is often referred to as Newtonian mechanics, and is associated with the physical concepts employed by and the mathematical methods invented by Newton himself, in parallel with Leibniz, and others. This is further described in the following sections. More abstract and general methods include Lagrangian mechanics and Hamiltonian mechanics. Much of the content of classical mechanics was created in the 18th and 19th centuries and extends considerably beyond (particularly in its use of analytical mathematics) the work of Newton.


Description of the theory

The following introduces the basic concepts of classical mechanics. For simplicity, it often models real-world objects as point particles, objects with negligible size. The motion of a point particle is characterized by a small number of parameters: its position, mass, and the forces applied to it. Each of these parameters is discussed in turn.

In reality, the kind of objects which classical mechanics can describe always have a non-zero size. (The physics of very small particles, such as the electron, is more accurately described by quantum mechanics). Objects with non-zero size have more complicated behavior than hypothetical point particles, because of the additional degrees of freedom—for example, a baseball can spin while it is moving. However, the results for point particles can be used to study such objects by treating them as composite objects, made up of a large number of interacting point particles. The center of mass of a composite object behaves like a point particle.

Displacement and its derivatives

| The SI derived units with kg, m and s | |
|---|---|
| displacement | m |
| speed | m s−1 |
| acceleration | m s−2 |
| jerk | m s−3 |
| specific energy | m² s−2 |
| absorbed dose rate | m² s−3 |
| moment of inertia | kg m² |
| momentum | kg m s−1 |
| angular momentum | kg m² s−1 |
| force | kg m s−2 |
| torque | kg m² s−2 |
| energy | kg m² s−2 |
| power | kg m² s−3 |
| pressure | kg m−1 s−2 |
| surface tension | kg s−2 |
| irradiance | kg s−3 |
| kinematic viscosity | m² s−1 |
| dynamic viscosity | kg m−1 s−1 |

The displacement, or position, of a point particle is defined with respect to an arbitrary fixed reference point, O, in space, usually accompanied by a coordinate system, with the reference point located at the origin of the coordinate system. It is defined as the vector r from O to the particle. In general, the point particle need not be stationary relative to O, so r is a function of t, the time elapsed since an arbitrary initial time. In pre-Einstein relativity (known as Galilean relativity), time is considered an absolute, i.e., the time interval between any given pair of events is the same for all observers. In addition to relying on absolute time, classical mechanics assumes Euclidean geometry for the structure of space.[1]

Velocity and speed

The velocity, or the rate of change of position with time, is defined as the derivative of the position with respect to time:

$$\mathbf{v} = \frac{\mathrm{d}\mathbf{r}}{\mathrm{d}t}.$$

In classical mechanics, velocities are directly additive and subtractive. For example, if one car traveling east at 60 km/h passes another car traveling east at 50 km/h, then from the perspective of the slower car, the faster car is traveling east at 60 − 50 = 10 km/h, whereas from the perspective of the faster car, the slower car is moving at 10 km/h to the west. Velocities are directly additive as vector quantities; they must be dealt with using vector analysis.

Mathematically, if the velocity of the first object in the previous discussion is denoted by the vector $\mathbf{u} = u\,\mathbf{d}$ and the velocity of the second object by the vector $\mathbf{v} = v\,\mathbf{e}$, where u is the speed of the first object, v is the speed of the second object, and $\mathbf{d}$ and $\mathbf{e}$ are unit vectors in the directions of motion of each particle respectively, then the velocity of the first object as seen by the second object is:

$$\mathbf{u}' = \mathbf{u} - \mathbf{v}.$$

Similarly:

$$\mathbf{v}' = \mathbf{v} - \mathbf{u}.$$

When both objects are moving in the same direction, this equation can be simplified to:

$$\mathbf{u}' = (u - v)\,\mathbf{d}.$$

Or, by ignoring direction, the difference can be given in terms of speed only:

$$u' = u - v.$$
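The two-car example can be checked with a few lines of Python. This is only an illustrative sketch: the function name `relative_velocity` and the convention that east is +x and north is +y are assumptions made here, not part of the text.

```python
def relative_velocity(v_object, v_observer):
    """Velocity of an object as seen by an observer.

    Both inputs are component tuples measured in the same (ground) frame;
    the result is the component-wise difference v_object - v_observer.
    """
    return tuple(a - b for a, b in zip(v_object, v_observer))


# Assumed convention: east is +x, north is +y, speeds in km/h.
fast_car = (60.0, 0.0)
slow_car = (50.0, 0.0)

seen_from_slow = relative_velocity(fast_car, slow_car)  # 10 km/h toward the east
seen_from_fast = relative_velocity(slow_car, fast_car)  # 10 km/h toward the west

# The same function handles non-collinear motion, e.g. a car heading north.
north_car = (0.0, 30.0)
seen_from_slow_north = relative_velocity(north_car, slow_car)
```

Because the subtraction is done per component, the same-direction case reduces to a simple difference of speeds, as in the last formula above.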

Acceleration

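The acceleration, or rate of change of velocity, is the derivative of the velocity with respect to time (the second derivative of the position with respect to time):

$$\mathbf{a} = \frac{\mathrm{d}\mathbf{v}}{\mathrm{d}t} = \frac{\mathrm{d}^2\mathbf{r}}{\mathrm{d}t^2}.$$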

Acceleration can arise from a change with time of the magnitude of the velocity or of the direction of the velocity or both. If only the magnitude, v, of the velocity decreases, this is sometimes referred to as deceleration, but generally any change in the velocity with time, including deceleration, is simply referred to as acceleration.

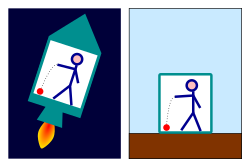

Frames of reference

While the position and velocity and acceleration of a particle can be referred to any observer in any state of motion, classical mechanics assumes the existence of a special family of reference frames in terms of which the mechanical laws of nature take a comparatively simple form. These special reference frames are called inertial frames. They are characterized by the absence of acceleration of the observer and the requirement that all forces entering the observer's physical laws originate in identifiable sources (charges, gravitational bodies, and so forth). A non-inertial reference frame is one accelerating with respect to an inertial one, and in such a non-inertial frame a particle is subject to acceleration by fictitious forces that enter the equations of motion solely as a result of its accelerated motion, and do not originate in identifiable sources. These fictitious forces are in addition to the real forces recognized in an inertial frame. A key concept of inertial frames is the method for identifying them. (See inertial frame of reference for a discussion.) For practical purposes, reference frames that are unaccelerated with respect to the distant stars are regarded as good approximations to inertial frames.

The following consequences can be derived about the perspective of an event in two inertial reference frames, S and S', where S' is traveling at a relative velocity of $\mathbf{u}$ to S.

- $\mathbf{v}' = \mathbf{v} - \mathbf{u}$ (the velocity $\mathbf{v}'$ of a particle from the perspective of S' is slower by $\mathbf{u}$ than its velocity $\mathbf{v}$ from the perspective of S)

- $\mathbf{a}' = \mathbf{a}$ (the acceleration of a particle remains the same regardless of reference frame)

- $\mathbf{F}' = \mathbf{F}$ (the force on a particle remains the same regardless of reference frame)

- the speed of light is not a constant in classical mechanics, nor does the special position given to the speed of light in relativistic mechanics have a counterpart in classical mechanics.

- the form of Maxwell's equations is not preserved across such inertial reference frames. However, in Einstein's theory of special relativity, the assumed constancy (invariance) of the vacuum speed of light alters the relationships between inertial reference frames so as to render Maxwell's equations invariant.

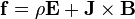

Forces; Newton's Second Law

Newton was the first to mathematically express the relationship between force and momentum. Some physicists interpret Newton's second law of motion as a definition of force and mass, while others consider it to be a fundamental postulate, a law of nature. Either interpretation has the same mathematical consequences, historically known as "Newton's Second Law":

$$\mathbf{F} = \frac{\mathrm{d}\mathbf{p}}{\mathrm{d}t} = \frac{\mathrm{d}(m\mathbf{v})}{\mathrm{d}t}.$$

The quantity $m\mathbf{v}$ is called the (canonical) momentum. The net force on a particle is thus equal to the rate of change of momentum of the particle with time. Since the definition of acceleration is $\mathbf{a} = \mathrm{d}\mathbf{v}/\mathrm{d}t$, when the mass of the object is fixed, for example, when the mass variation with velocity found in special relativity is negligible (an implicit approximation in Newtonian mechanics), Newton's law can be written in the simplified and more familiar form

$$\mathbf{F} = m\mathbf{a}.$$

So long as the force acting on a particle is known, Newton's second law is sufficient to describe the motion of a particle. Once independent relations for each force acting on a particle are available, they can be substituted into Newton's second law to obtain an ordinary differential equation, which is called the equation of motion.

As an example, assume that friction is the only force acting on the particle, and that it may be modeled as a function of the velocity of the particle, for example:

$$\mathbf{F}_{\mathrm{R}} = -\lambda \mathbf{v},$$

with λ a positive constant. Then the equation of motion is

$$-\lambda \mathbf{v} = m\mathbf{a} = m\frac{\mathrm{d}\mathbf{v}}{\mathrm{d}t}.$$

This can be integrated to obtain

$$\mathbf{v} = \mathbf{v}_0\, e^{-\lambda t / m},$$

where $\mathbf{v}_0$ is the initial velocity. This means that the velocity of this particle decays exponentially to zero as time progresses. In this case, an equivalent viewpoint is that the kinetic energy of the particle is absorbed by friction (which converts it to heat energy in accordance with the conservation of energy), slowing it down. This expression can be further integrated to obtain the position $\mathbf{r}$ of the particle as a function of time.
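As a rough numerical illustration of the friction example, the sketch below steps the one-dimensional equation of motion m dv/dt = −λv forward in time and compares the result with the exponential solution. The function names, the forward Euler integrator, the parameter values, and the closed form r(t) = r0 + (m v0/λ)(1 − e^(−λt/m)) obtained by integrating the velocity once more are all choices made here for illustration.

```python
import math


def velocity_exact(v0, lam, m, t):
    """Closed-form velocity v0 * exp(-lam * t / m) for linear friction."""
    return v0 * math.exp(-lam * t / m)


def position_exact(r0, v0, lam, m, t):
    """Position obtained by integrating the exponential velocity once more."""
    return r0 + (m * v0 / lam) * (1.0 - math.exp(-lam * t / m))


def integrate_euler(v0, lam, m, t_end, steps):
    """Step m dv/dt = -lam * v with the forward Euler method, starting at r = 0."""
    dt = t_end / steps
    v, r = v0, 0.0
    for _ in range(steps):
        a = -lam * v / m   # Newton's second law with the friction force only
        r += v * dt        # advance position with the current velocity
        v += a * dt        # advance velocity with the current acceleration
    return v, r


# Hypothetical parameters: 2 kg particle, lam = 0.5 kg/s, 3 m/s initial speed.
v_num, r_num = integrate_euler(v0=3.0, lam=0.5, m=2.0, t_end=4.0, steps=100_000)
v_ref = velocity_exact(3.0, 0.5, 2.0, 4.0)
r_ref = position_exact(0.0, 3.0, 0.5, 2.0, 4.0)
```

With a small enough time step the numerical and closed-form values agree closely, and the position approaches the finite limit m v0/λ as the velocity decays.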

Important forces include the gravitational force and the Lorentz force for electromagnetism. In addition, Newton's third law can sometimes be used to deduce the forces acting on a particle: if it is known that particle A exerts a force $\mathbf{F}$ on another particle B, it follows that B must exert an equal and opposite reaction force, $-\mathbf{F}$, on A. The strong form of Newton's third law requires that $\mathbf{F}$ and $-\mathbf{F}$ act along the line connecting A and B, while the weak form does not. Illustrations of the weak form of Newton's third law are often found for magnetic forces.

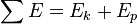

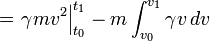

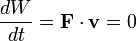

Energy

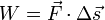

If a force $\mathbf{F}$ is applied to a particle that achieves a displacement $\Delta\mathbf{r}$, the work done by the force is defined as the scalar product of force and displacement vectors:

$$W = \mathbf{F} \cdot \Delta\mathbf{r}.$$

If the mass of the particle is constant, and $W_{\mathrm{total}}$ is the total work done on the particle, obtained by summing the work done by each applied force, from Newton's second law:

$$W_{\mathrm{total}} = \Delta E_k,$$

where $E_k$ is called the kinetic energy. For a point particle, it is mathematically defined as the amount of work done to accelerate the particle from zero velocity to the given velocity v:

$$E_k = \tfrac{1}{2} m v^2.$$

For extended objects composed of many particles, the kinetic energy of the composite body is the sum of the kinetic energies of the particles.

A particular class of forces, known as conservative forces, can be expressed as the gradient of a scalar function, known as the potential energy and denoted $E_p$:

$$\mathbf{F} = -\nabla E_p.$$

If all the forces acting on a particle are conservative, and $E_p$ is the total potential energy (defined as the work done by the forces involved in rearranging the mutual positions of bodies), obtained by summing the potential energies corresponding to each force, then

$$\mathbf{F} \cdot \Delta\mathbf{r} = -\nabla E_p \cdot \Delta\mathbf{r} = -\Delta E_p \;\Rightarrow\; -\Delta E_p = \Delta E_k \;\Rightarrow\; \Delta(E_k + E_p) = 0.$$

This result is known as conservation of energy and states that the total energy,

$$\sum E = E_k + E_p,$$

is constant in time. It is often useful, because many commonly encountered forces are conservative.
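Conservation of energy for a conservative force can be illustrated numerically. The sketch below assumes a one-dimensional mass on an ideal spring, with potential energy k x²/2 and force −k x, and tracks the sum of kinetic and potential energy along a trajectory computed with the velocity Verlet scheme. The spring system, the integrator, and all names and parameter values are assumptions chosen for illustration.

```python
def potential_energy(x, k):
    """Spring potential energy E_p = k x^2 / 2."""
    return 0.5 * k * x * x


def force(x, k):
    """Conservative force F = -dE_p/dx = -k x."""
    return -k * x


def kinetic_energy(v, m):
    """Kinetic energy E_k = m v^2 / 2."""
    return 0.5 * m * v * v


def total_energy_history(x0, v0, m, k, dt, steps):
    """Integrate with velocity Verlet and record E_k + E_p after every step."""
    x, v = x0, v0
    a = force(x, k) / m
    energies = [kinetic_energy(v, m) + potential_energy(x, k)]
    for _ in range(steps):
        x += v * dt + 0.5 * a * dt * dt   # position update
        a_new = force(x, k) / m           # acceleration at the new position
        v += 0.5 * (a + a_new) * dt       # velocity update with averaged acceleration
        a = a_new
        energies.append(kinetic_energy(v, m) + potential_energy(x, k))
    return energies


# Hypothetical parameters: 1 kg mass, k = 4 N/m, released from rest at x = 1 m.
energies = total_energy_history(x0=1.0, v0=0.0, m=1.0, k=4.0, dt=1e-3, steps=10_000)
drift = max(abs(e - energies[0]) for e in energies)
```

The recorded total stays very close to its initial value while kinetic and potential energy trade off; the small residual `drift` comes from the finite time step, not from the physics.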

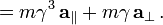

Beyond Newton's Laws

Classical mechanics also includes descriptions of the complex motions of extended non-pointlike objects. The concepts of angular momentum rely on the same calculus used to describe one-dimensional motion.

There are two important alternative formulations of classical mechanics: Lagrangian mechanics and Hamiltonian mechanics. These, and other modern formulations, usually bypass the concept of "force", instead referring to other physical quantities, such as energy, for describing mechanical systems.

Classical transformations

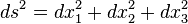

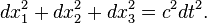

Consider two reference frames S and S'. For observers in each of the reference frames an event has space-time coordinates of (x, y, z, t) in frame S and (x', y', z', t') in frame S'. Assuming time is measured the same in all reference frames, and if we require x = x' when t = 0, then the relation between the space-time coordinates of the same event observed from the reference frames S' and S, which are moving at a relative velocity of u in the x direction, is:

- x' = x - ut

- y' = y

- z' = z

- t' = t

This set of formulas defines a group transformation known as the Galilean transformation (informally, the Galilean transform). This group is a limiting case of the Poincaré group used in special relativity. The limiting case applies when the velocity u is very small compared to c, the speed of light.
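The transformation can be written as a small Python function. This is a minimal sketch: the name `galilean_transform` and the tuple layout (x, y, z, t) are conventions assumed here. It also illustrates the group property mentioned above: two successive boosts along x are equivalent to a single boost with the sum of the velocities, and a boost by −u undoes a boost by u.

```python
def galilean_transform(event, u):
    """Map an event (x, y, z, t) in frame S to (x', y', z', t') in frame S'.

    S' moves with constant velocity u along the x direction of S, and the
    two origins coincide at t = 0. Time is the same in both frames.
    """
    x, y, z, t = event
    return (x - u * t, y, z, t)


event_in_s = (10.0, 2.0, 3.0, 4.0)

# A single boost with u = 1.5.
event_in_s_prime = galilean_transform(event_in_s, 1.5)

# The inverse transformation is a boost with the opposite velocity.
back_in_s = galilean_transform(event_in_s_prime, -1.5)

# Two successive boosts compose into one boost with the summed velocity.
two_steps = galilean_transform(galilean_transform(event_in_s, 1.5), 2.0)
one_step = galilean_transform(event_in_s, 3.5)
```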

For some problems, it is convenient to use rotating coordinates (reference frames). One can then either keep a mapping to a convenient inertial frame, or additionally introduce a fictitious centrifugal force and Coriolis force.

History

- See also: Timeline of classical mechanics

Some Greek philosophers of antiquity, among them Aristotle, may have been the first to maintain the idea that "everything happens for a reason" and that theoretical principles can assist in the understanding of nature. While, to a modern reader, many of these preserved ideas come forth as eminently reasonable, there is a conspicuous lack of both mathematical theory and controlled experiment, as we know it. These both turned out to be decisive factors in forming modern science, and they started out with classical mechanics.

An early experimental scientific method was introduced into mechanics in the 11th century by al-Biruni, who along with al-Khazini in the 12th century, unified statics and dynamics into the science of mechanics, and combined the fields of hydrostatics with dynamics to create the field of hydrodynamics.[2] Concepts related to Newton's laws of motion were also enunciated by several other Muslim physicists during the Middle Ages. Early versions of the law of inertia, known as Newton's first law of motion, and the concept relating to momentum, part of Newton's second law of motion, were described by Ibn al-Haytham (Alhacen)[3][4] and Avicenna.[5][6] The proportionality between force and acceleration, an important principle in classical mechanics, was first stated by Hibat Allah Abu'l-Barakat al-Baghdaadi,[7] and theories on gravity were developed by Ja'far Muhammad ibn Mūsā ibn Shākir,[8] Ibn al-Haytham,[9] and al-Khazini.[10] It is known that Galileo Galilei's mathematical treatment of acceleration and his concept of impetus[11] grew out of earlier medieval analyses of motion, especially those of Avicenna,[5] Ibn Bajjah,[12] and Jean Buridan.

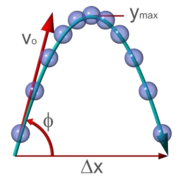

The first published causal explanation of the motions of planets was Johannes Kepler's Astronomia nova published in 1609. He concluded, based on Tycho Brahe's observations of the orbit of Mars, that the orbits were ellipses. This break with ancient thought was happening around the same time that Galilei was proposing abstract mathematical laws for the motion of objects. He may (or may not) have performed the famous experiment of dropping two cannon balls of different masses from the tower of Pisa, showing that they both hit the ground at the same time. The reality of this experiment is disputed, but, more importantly, he did carry out quantitative experiments by rolling balls on an inclined plane. His theory of accelerated motion derived from the results of such experiments, and forms a cornerstone of classical mechanics.

As a foundation for his principles of natural philosophy, Newton proposed three laws of motion: the law of inertia, his second law of acceleration, mentioned above, and the law of action and reaction, thereby laying the foundations for classical mechanics. Both Newton's second and third laws were given proper scientific and mathematical treatment in Newton's Philosophiæ Naturalis Principia Mathematica, which distinguishes them from earlier attempts at explaining similar phenomena, which were either incomplete, incorrect, or given little accurate mathematical expression. Newton also enunciated the principles of conservation of momentum and angular momentum. In mechanics, Newton was also the first to provide a correct scientific and mathematical formulation of gravity, in Newton's law of universal gravitation. The combination of Newton's laws of motion and gravitation provides the fullest and most accurate description of classical mechanics. He demonstrated that these laws apply to everyday objects as well as to celestial objects. In particular, he obtained a theoretical explanation of Kepler's laws of motion of the planets.

Newton had previously invented the calculus and used it to perform the mathematical calculations. For acceptability, his book, the Principia, was formulated entirely in terms of the long-established geometric methods, which were soon to be eclipsed by his calculus. However, it was Leibniz who developed the notation of the derivative and integral preferred today.

Newton, and most of his contemporaries, with the notable exception of Huygens, worked on the assumption that classical mechanics would be able to explain all phenomena, including light, in the form of geometric optics. Even when discovering the so-called Newton's rings (a wave interference phenomenon) his explanation remained with his own corpuscular theory of light.

After Newton, classical mechanics became a principal field of study in mathematics as well as physics.

Some difficulties were discovered in the late 19th century that could only be resolved by more modern physics. Some of these difficulties related to compatibility with electromagnetic theory, and the famous Michelson-Morley experiment. The resolution of these problems led to the special theory of relativity, often included in the term classical mechanics.

A second set of difficulties related to thermodynamics. When combined with thermodynamics, classical mechanics leads to the Gibbs paradox of classical statistical mechanics, in which entropy is not a well-defined quantity. Black-body radiation was not explained without the introduction of quanta. As experiments reached the atomic level, classical mechanics failed to explain, even approximately, such basic things as the energy levels and sizes of atoms and the photo-electric effect. The effort at resolving these problems led to the development of quantum mechanics.

Since the end of the 20th century, classical mechanics has no longer held the place of an independent theory in physics. Emphasis has shifted to understanding the fundamental forces of nature as in the Standard Model and its more modern extensions into a unified theory of everything.[13] Classical mechanics is a theory for the study of the motion of non-quantum mechanical, low-energy particles in weak gravitational fields.

Limits of validity

Many branches of classical mechanics are simplifications or approximations of more accurate forms; two of the most accurate being general relativity and relativistic statistical mechanics. Geometric optics is an approximation to the quantum theory of light, and does not have a superior "classical" form.

The Newtonian approximation to special relativity

Newtonian, or non-relativistic classical momentum

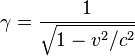

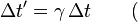

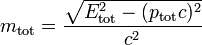

is the result of the first order Taylor approximation of the relativistic expression:

, where

, where

when expanded about

so it is only valid when the velocity is much less than the speed of light. Quantitatively speaking, the approximation is good so long as

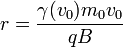

For example, the relativistic cyclotron frequency of a cyclotron, gyrotron, or high voltage magnetron is given by $f = f_c \dfrac{m_0}{m_0 + T/c^2}$, where $f_c$ is the classical frequency of an electron (or other charged particle) with kinetic energy T and (rest) mass $m_0$ circling in a magnetic field. The rest mass of an electron corresponds to an energy of 511 keV, so the frequency correction is 1% for a magnetic vacuum tube with a 5.11 kV direct current accelerating voltage.
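The size of these relativistic corrections can be estimated with a short calculation. In the sketch below, the function names are hypothetical, the electron rest energy is taken as the rounded 511 keV quoted in the text, and the frequency ratio is written in energy units as f/f_c = m0c² / (m0c² + T).

```python
import math

ELECTRON_REST_ENERGY_KEV = 511.0  # rounded value quoted in the text


def lorentz_factor(beta):
    """gamma = 1 / sqrt(1 - beta^2), with beta = v / c."""
    return 1.0 / math.sqrt(1.0 - beta * beta)


def momentum_relative_error(beta):
    """Fractional shortfall of the Newtonian m0*v relative to gamma*m0*v."""
    return 1.0 - 1.0 / lorentz_factor(beta)


def cyclotron_frequency_ratio(kinetic_energy_kev,
                              rest_energy_kev=ELECTRON_REST_ENERGY_KEV):
    """Ratio f / f_c = m0 c^2 / (m0 c^2 + T), with both energies in keV."""
    return rest_energy_kev / (rest_energy_kev + kinetic_energy_kev)


# An electron accelerated through 5.11 kV gains T = 5.11 keV.
ratio = cyclotron_frequency_ratio(5.11)
correction_percent = (1.0 - ratio) * 100.0

# At a tenth of the speed of light the Newtonian momentum is low by about 0.5%.
error_at_tenth_c = momentum_relative_error(0.1)
```

The frequency correction comes out at just under 1%, matching the figure in the text, and the momentum error at v = 0.1c is close to the leading Taylor term (v/c)²/2.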

The classical approximation to quantum mechanics

The ray approximation of classical mechanics breaks down when the de Broglie wavelength is not much smaller than other dimensions of the system. For non-relativistic particles, this wavelength is

$$\lambda = \frac{h}{p},$$

where h is Planck's constant and p is the momentum.

Again, this happens with electrons before it happens with heavier particles. For example, the electrons used by Clinton Davisson and Lester Germer in 1927, accelerated by 54 volts, had a wavelength of 0.167 nm, which was long enough to exhibit a single diffraction side lobe when reflecting from the face of a nickel crystal with atomic spacing of 0.215 nm. With a larger vacuum chamber, it would seem relatively easy to increase the angular resolution from around a radian to a milliradian and see quantum diffraction from the periodic patterns of integrated circuit computer memory.
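The wavelength quoted for the Davisson and Germer electrons can be reproduced from λ = h/p. The sketch below assumes a non-relativistic electron accelerated from rest, so that p = √(2 m e V); the function name is hypothetical and the constants are standard SI values, with the electron mass rounded.

```python
import math

PLANCK_H = 6.62607015e-34            # Planck's constant in J s (exact SI value)
ELECTRON_MASS = 9.1093837e-31        # electron rest mass in kg (rounded)
ELEMENTARY_CHARGE = 1.602176634e-19  # elementary charge in C (exact SI value)


def de_broglie_wavelength_m(voltage):
    """Wavelength in metres of an electron accelerated from rest through
    `voltage` volts, using the non-relativistic momentum p = sqrt(2 m e V)."""
    momentum = math.sqrt(2.0 * ELECTRON_MASS * ELEMENTARY_CHARGE * voltage)
    return PLANCK_H / momentum


wavelength_nm = de_broglie_wavelength_m(54.0) * 1e9
```

For 54 V this gives roughly 0.167 nm, comparable to the 0.215 nm atomic spacing of the nickel crystal, which is why diffraction was observable.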

More practical examples of the failure of classical mechanics on an engineering scale are conduction by quantum tunneling in tunnel diodes and very narrow transistor gates in integrated circuits.

Classical mechanics is the same extreme high frequency approximation as geometric optics. It is more often accurate because it describes particles and bodies with rest mass. These have more momentum and therefore shorter de Broglie wavelengths than massless particles, such as light, with the same kinetic energies.

Inertial frame of reference

In physics, an inertial frame of reference is a frame of reference which belongs to a set of frames in which physical laws hold in the same and simplest form. According to the first postulate of special relativity, all physical laws take their simplest form in an inertial frame, and there exist multiple inertial frames interrelated by uniform translation: [1]

Special principle of relativity: If a system of coordinates K is chosen so that, in relation to it, physical laws hold good in their simplest form, the same laws hold good in relation to any other system of coordinates K' moving in uniform translation relatively to K.

– Albert Einstein: The foundation of the general theory of relativity, Section A, §1

The principle of simplicity can be used within Newtonian physics as well as in special relativity; see Nagel[2] and also Blagojević.[3]

The laws of Newtonian mechanics do not always hold in their simplest form...If, for instance, an observer is placed on a disc rotating relative to the earth, he/she will sense a 'force' pushing him/her toward the periphery of the disc, which is not caused by any interaction with other bodies. Here, the acceleration is not the consequence of the usual force, but of the so-called inertial force. Newton's laws hold in their simplest form only in a family of reference frames, called inertial frames. This fact represents the essence of the Galilean principle of relativity:

The laws of mechanics have the same form in all inertial frames.

– Milutin Blagojević: Gravitation and Gauge Symmetries, p. 4

In practical terms, the equivalence of inertial reference frames means that scientists within a box moving uniformly cannot determine their absolute velocity by any experiment (otherwise the differences would set up an absolute standard reference frame).[4][5] According to this definition, supplemented with the constancy of the speed of light, inertial frames of reference transform among themselves according to the Poincaré group of symmetry transformations, of which the Lorentz transformations are a subgroup.[6]

The expression inertial frame of reference (German: Inertialsystem) was coined by Ludwig Lange in 1885, to replace Newton's definitions of "absolute space and time" by a more operational definition.[7][8] As referenced by Iro, Lange proposed:[9]

A reference frame in which a mass point thrown from the same point in three different (non co-planar) directions follows rectilinear paths each time it is thrown, is called an inertial frame.

– L. Lange (1885) as quoted by Max von Laue in his book (1921) Die Relativitätstheorie, p. 34, and translated by Iro

A discussion of Lange's proposal can be found in Mach.[10]

The inadequacy of the notion of "absolute space" in Newtonian mechanics is spelled out by Blagojević:[11]

- The existence of absolute space contradicts the internal logic of classical mechanics since, according to Galilean principle of relativity, none of the inertial frames can be singled out.

- Absolute space does not explain inertial forces since they are related to acceleration with respect to any one of the inertial frames.

- Absolute space acts on physical objects by inducing their resistance to acceleration but it cannot be acted upon.

– Milutin Blagojević: Gravitation and Gauge Symmetries, p. 5

The utility of operational definitions was carried much further in the special theory of relativity.[12] Some historical background including Lange's definition is provided by DiSalle, who says in summary: [13]

The original question, “relative to what frame of reference do the laws of motion hold?” is revealed to be wrongly posed. For the laws of motion essentially determine a class of reference frames, and (in principle) a procedure for constructing them.


Newton's inertial frame of reference

Figure: Frame S' has an arbitrary but fixed rotation with respect to frame S. They are both inertial frames provided a body not subject to forces appears to move in a straight line. If that motion is seen in one frame, it will also appear that way in the other.

Within the realm of Newtonian mechanics, an inertial frame of reference, or inertial reference frame, is one in which Newton's first law of motion is valid.[14] However, the principle of special relativity generalizes the notion of inertial frame to include all physical laws, not simply Newton's first law.

Newton viewed the first law as valid in any reference frame moving with uniform velocity relative to the fixed stars;[15] that is, neither rotating nor accelerating relative to the stars.[16] Today the notion of "absolute space" is abandoned, and an inertial frame in the field of classical mechanics is defined as:[17][18]

An inertial frame of reference is one in which the motion of a particle not subject to forces is in a straight line at constant speed.

Hence, with respect to an inertial frame, an object or body accelerates only when a physical force is applied, and (following Newton's first law of motion), in the absence of a net force, a body at rest will remain at rest and a body in motion will continue to move uniformly—that is, in a straight line and at constant speed. Newtonian inertial frames transform among each other according to the Galilean group of symmetries.

If this rule is interpreted as saying that straight-line motion is an indication of zero net force, the rule does not identify inertial reference frames, because straight-line motion can be observed in a variety of frames. If the rule is interpreted as defining an inertial frame, then we have to be able to determine when zero net force is applied. The problem was summarized by Einstein:[19]

The weakness of the principle of inertia lies in this, that it involves an argument in a circle: a mass moves without acceleration if it is sufficiently far from other bodies; we know that it is sufficiently far from other bodies only by the fact that it moves without acceleration.

– Albert Einstein: The Meaning of Relativity, p. 58

There are several approaches to this issue. One approach is to argue that all real forces drop off with distance from their sources in a known manner, so we have only to be sure that we are far enough away from all sources to ensure that no force is present.[20] A possible issue with this approach is the historically long-lived view that the distant universe might affect matters (Mach's principle). Another approach is to identify all real sources for real forces and account for them. A possible issue with this approach is that we might miss something, or account inappropriately for their influence (Mach's principle again?). A third approach is to look at the way the forces transform when we shift reference frames. Fictitious forces, those that arise due to the acceleration of a frame, disappear in inertial frames, and have complicated rules of transformation in general cases. On the basis of the universality of physical law and the search for frames where the laws are most simply expressed, inertial frames are distinguished by the absence of such fictitious forces.

Newton enunciated a principle of relativity himself in one of his corollaries to the laws of motion:[21][22]

The motions of bodies included in a given space are the same among themselves, whether that space is at rest or moves uniformly forward in a straight line.

– Isaac Newton: Principia, Corollary V, p. 88 in Andrew Motte translation

This principle differs from the special principle in two ways: first, it is restricted to mechanics, and second, it makes no mention of simplicity. It shares with the special principle the invariance of the form of the description among mutually translating reference frames.[23] The role of fictitious forces in classifying reference frames is pursued further below.

Non-inertial reference frames

- See also: Non-inertial frame and Rotating spheres

Inertial and non-inertial reference frames can be distinguished by the absence or presence of fictitious forces, as explained shortly.[24][25]

The effect of his being in the noninertial frame is to require the observer to introduce a fictitious force into his calculations….

– Sidney Borowitz and Lawrence A Bornstein in A Contemporary View of Elementary Physics, p. 138

The presence of fictitious forces indicates the physical laws are not the simplest laws available so, in terms of the special principle of relativity, a frame where fictitious forces are present is not an inertial frame:[26]

The equations of motion in a non-inertial system differ from the equations in an inertial system by additional terms called inertial forces. This allows us to detect experimentally the non-inertial nature of a system.

– V. I. Arnol'd: Mathematical Methods of Classical Mechanics Second Edition, p. 129

Bodies in non-inertial reference frames are subject to so-called fictitious forces (pseudo-forces); that is, forces that result from the acceleration of the reference frame itself and not from any physical force acting on the body. Examples of fictitious forces are the centrifugal force and the Coriolis force in rotating reference frames.

How, then, are "fictitious" forces to be separated from "real" forces? It is hard to apply the Newtonian definition of an inertial frame without this separation. For example, consider a stationary object in an inertial frame. Being at rest, it has no net force applied to it. But in a frame rotating about a fixed axis, the object appears to move in a circle, and is subject to centripetal force (which is made up of the Coriolis force and the centrifugal force). How can we decide that the rotating frame is a non-inertial frame? There are two approaches to resolving this. One approach is to look for the origin of the fictitious forces (the Coriolis force and the centrifugal force): we will find there are no sources for these forces, no originating bodies.[27] A second approach is to look at a variety of frames of reference. For any inertial frame, the Coriolis force and the centrifugal force disappear, so application of the special principle of relativity would identify the frames where these forces disappear as sharing the same, and the simplest, physical laws, and hence rule that the rotating frame is not an inertial frame.
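As a worked check of the claim that the centrifugal and Coriolis forces together make up the apparent centripetal force, the following sketch may help. It assumes notation not used in the original text: the frame rotates with constant angular velocity $\boldsymbol{\Omega}$ about a fixed axis, the body has mass $m$ and sits at rest in the inertial frame at position $\mathbf{r}$ perpendicular to the axis, so that in the rotating frame its apparent velocity is $\mathbf{v}' = -\boldsymbol{\Omega}\times\mathbf{r}$.

$$
\begin{aligned}
\mathbf{F}_{\text{centrifugal}} &= -m\,\boldsymbol{\Omega}\times(\boldsymbol{\Omega}\times\mathbf{r}) = m\Omega^{2}\,\mathbf{r},\\
\mathbf{F}_{\text{Coriolis}} &= -2m\,\boldsymbol{\Omega}\times\mathbf{v}' = 2m\,\boldsymbol{\Omega}\times(\boldsymbol{\Omega}\times\mathbf{r}) = -2m\Omega^{2}\,\mathbf{r},\\
\mathbf{F}_{\text{centrifugal}} + \mathbf{F}_{\text{Coriolis}} &= -m\Omega^{2}\,\mathbf{r}.
\end{aligned}
$$

The sum points toward the axis and has magnitude $m\Omega^{2}r = m|\mathbf{v}'|^{2}/r$, which is exactly the centripetal force needed for the apparent circular motion, even though no physical body exerts it.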

Newton examined this problem himself using rotating spheres, as shown in Figure 2 and Figure 3. He pointed out that if the spheres are not rotating, the tension in the tying string is measured as zero in every frame of reference.[28] If the spheres only appear to rotate (that is, we are watching stationary spheres from a rotating frame), the zero tension in the string is accounted for by observing that the centripetal force is supplied by the centrifugal and Coriolis forces in combination, so no tension is needed. If the spheres really are rotating, the tension observed is exactly the centripetal force required by the circular motion. Thus, measurement of the tension in the string identifies the inertial frame: it is the one where the tension in the string provides exactly the centripetal force demanded by the motion as it is observed in that frame, and not a different value. That is, the inertial frame is the one where the fictitious forces vanish. (See Rotating spheres for original text and mathematical formulation.)

So much for fictitious forces due to rotation. However, for linear acceleration, Newton expressed the idea of undetectability of straight-line accelerations held in common:[22]

If bodies, any how moved among themselves, are urged in the direction of parallel lines by equal accelerative forces, they will continue to move among themselves, after the same manner as if they had been urged by no such forces.

– Isaac Newton: Principia Corollary VI, p. 89, in Andrew Motte translation

This principle generalizes the notion of an inertial frame. For example, an observer confined in a free-falling lift will assert that he is in a valid inertial frame, even though he is accelerating under gravity, so long as he has no knowledge about anything outside the lift. So, strictly speaking, an inertial frame is a relative concept. With this in mind, we can define inertial frames collectively as a set of frames which are stationary or moving at constant velocity with respect to each other, so that a single inertial frame is defined as an element of this set.

For these ideas to apply, everything observed in the frame has to be subject to a base-line, common acceleration shared by the frame itself. That situation would apply, for example, to the elevator example, where all objects are subject to the same gravitational acceleration, and the elevator itself accelerates at the same rate.
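The point of Corollary VI can be illustrated numerically. The sketch below assumes one-dimensional motion under constant acceleration; the initial positions and velocities, and the names `position` and `separation`, are hypothetical values and helpers chosen only for illustration. It compares the separation of two bodies with and without a shared acceleration.

```python
# Two bodies given the same extra acceleration keep the same relative motion.

G = 9.81  # m/s^2, assumed uniform gravitational acceleration


def position(x0, v0, a, t):
    """Position at time t under constant acceleration a (1-D kinematics)."""
    return x0 + v0 * t + 0.5 * a * t * t


def separation(t, common_acceleration):
    """Separation of two bodies that share the same acceleration."""
    # Hypothetical initial conditions, for illustration only.
    body_a = position(0.0, 1.0, common_acceleration, t)
    body_b = position(2.0, -0.5, common_acceleration, t)
    return body_b - body_a


for t in (0.0, 0.5, 1.0, 2.0):
    free = separation(t, 0.0)      # no common acceleration
    falling = separation(t, -G)    # both bodies (and the lift) in free fall
    print(f"t={t:.1f} s  separation without g: {free:.3f} m, with g: {falling:.3f} m")
```

The common term cancels in the difference, which is why an observer inside the lift cannot detect it from the relative motions alone.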

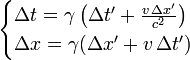

Newtonian mechanics

Classical mechanics, which includes relativity, assumes the equivalence of all inertial reference frames. Newtonian mechanics makes the additional assumptions of absolute space and absolute time. Given these two assumptions, the coordinates of the same event (a point in space and time) described in two inertial reference frames are related by a Galilean transformation

$$\mathbf{r}' = \mathbf{r} - \mathbf{r}_{0} - \mathbf{v}\,t$$

$$t' = t - t_{0}$$

where $\mathbf{r}_{0}$ and $t_{0}$ represent shifts in the origin of space and time, and $\mathbf{v}$ is the relative velocity of the two inertial reference frames. Under Galilean transformations, the time between two events ($t_{2} - t_{1}$) is the same for all inertial reference frames and the distance between two simultaneous events (or, equivalently, the length of any object, $|\mathbf{r}_{2} - \mathbf{r}_{1}|$) is also the same.
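The two invariants mentioned above can be checked with a short sketch. It assumes the standard form of the Galilean transformation, r' = r − r0 − v t and t' = t − t0. The function names and the numerical offsets and velocity are hypothetical, chosen only for illustration.

```python
def galilean(event, r0, t0, v):
    """Map an event (r, t) to (r', t') with r' = r - r0 - v*t and t' = t - t0."""
    r, t = event
    r_prime = tuple(ri - r0i - vi * t for ri, r0i, vi in zip(r, r0, v))
    return r_prime, t - t0


def distance(p, q):
    """Euclidean distance between two points given as tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q)) ** 0.5


# Hypothetical origin shifts and relative velocity of the second frame.
R0, T0, V = (1.0, -2.0, 0.5), 3.0, (4.0, 0.0, -1.0)

# Two simultaneous events (the ends of a rod at the same t) and one later event.
end_a = ((0.0, 0.0, 0.0), 2.0)
end_b = ((3.0, 4.0, 0.0), 2.0)
later = ((1.0, 1.0, 1.0), 7.0)

a_p, b_p, l_p = (galilean(e, R0, T0, V) for e in (end_a, end_b, later))

# Rod length measured in each frame.
print(distance(end_a[0], end_b[0]), distance(a_p[0], b_p[0]))
# Time interval between the first and the later event in each frame.
print(later[1] - end_a[1], l_p[1] - a_p[1])
```

Note that the length comparison only works because the two end events are simultaneous; for events at different times the spatial separation does depend on the frame.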

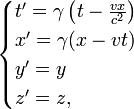

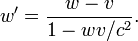

Special relativity

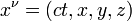

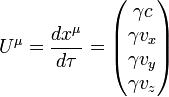

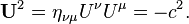

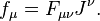

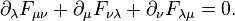

Special relativity (SR) (also known as the special theory of relativity or STR) is the physical theory of measurement in inertial frames of reference proposed in 1905 by Albert Einstein (after the considerable and independent contributions of Hendrik Lorentz and Henri Poincaré and others) in the paper "On the Electrodynamics of Moving Bodies".[1] It generalizes Galileo's principle of relativity – that all uniform motion is relative, and that there is no absolute and well-defined state of rest (no privileged reference frames) – from mechanics to all the laws of physics, including both the laws of mechanics and of electrodynamics, whatever they may be.[2] Special relativity incorporates the principle that the speed of light is the same for all inertial observers regardless of the state of motion of the source.[3]

This theory has a wide range of consequences which have been experimentally verified,[4] including counter-intuitive ones such as length contraction, time dilation and relativity of simultaneity, contradicting the classical notion that the duration of the time interval between two events is equal for all observers. (On the other hand, it introduces the space-time interval, which is invariant.) Combined with other laws of physics, the two postulates of special relativity predict the equivalence of matter and energy, as expressed in the mass-energy equivalence formula E = mc², where c is the speed of light in a vacuum.[5][6] The predictions of special relativity agree well with Newtonian mechanics in their common realm of applicability, specifically in experiments in which all velocities are small compared to the speed of light.
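To give a feel for the size of these effects, the following sketch evaluates the standard Lorentz factor γ = 1/√(1 − v²/c²), which governs time dilation and length contraction, together with the rest energy E = mc². The function names are hypothetical helpers introduced for illustration, not part of any library, and the sample speeds are arbitrary.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s (exact SI value)


def lorentz_factor(v):
    """Return gamma = 1 / sqrt(1 - v^2/c^2) for a speed v in m/s."""
    if abs(v) >= C:
        raise ValueError("speed must be below the speed of light")
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)


def dilated_time(proper_time, v):
    """Time between two ticks of a moving clock, as measured by the observer."""
    return lorentz_factor(v) * proper_time


def contracted_length(proper_length, v):
    """Length of a moving rod along its direction of motion."""
    return proper_length / lorentz_factor(v)


def rest_energy(mass_kg):
    """Rest energy in joules from E = m c^2."""
    return mass_kg * C ** 2


# Everyday speed, then two relativistic speeds.
for v in (300.0, 0.6 * C, 0.99 * C):
    print(f"v = {v:.3e} m/s  gamma = {lorentz_factor(v):.12f}")

print(f"Rest energy of 1 g: {rest_energy(1e-3):.3e} J")
```

At everyday speeds the factor differs from 1 by far less than one part in a billion, which is the sense in which the predictions agree with Newtonian mechanics when velocities are small compared to the speed of light.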

The theory is termed "special" because it applies the principle of relativity only to inertial frames. Einstein developed general relativity to apply the principle more generally, that is, to any frame, including accelerating frames, and that theory includes the effects of gravity.

Special relativity reveals that c is not just the velocity of a certain phenomenon, namely the propagation of electromagnetic radiation (light), but rather a fundamental feature of the way space and time are unified as spacetime. A consequence of this is that it is impossible for any particle that has mass to be accelerated to the speed of light.

Postulates

In his autobiographical notes, published in November 1949, Einstein described how he had arrived at the two fundamental postulates on which he based the special theory of relativity. After describing in detail the state of both mechanics and electrodynamics at the beginning of the 20th century, he wrote:

"Reflections of this type made it clear to me as long ago as shortly after 1900, i.e., shortly after Planck's trailblazing work, that neither mechanics nor electrodynamics could (except in limiting cases) claim exact validity. Gradually I despaired of the possibility of discovering the true laws by means of constructive efforts based on known facts. The longer and the more desperately I tried, the more I came to the conviction that only the discovery of a universal formal principle could lead us to assured results... How, then, could such a universal principle be found?"[7]

He discerned two fundamental propositions that seemed to be the most assured, regardless of the exact validity of either the (then) known laws of mechanics or electrodynamics. These propositions were: (1) the constancy of the velocity of light, and (2) the independence of physical laws (especially the constancy of the velocity of light) from the choice of inertial system. In his initial presentation of special relativity in 1905 he expressed these postulates as:[8]

- The Principle of Relativity - The laws by which the states of physical systems undergo change are not affected, whether these changes of state be referred to the one or the other of two systems in uniform translatory motion relative to each other.

- The Principle of Invariant Light Speed - Light in vacuum propagates with the speed c (a fixed constant) in terms of any system of inertial coordinates, regardless of the state of motion of the light source.

The derivation of special relativity depends not only on these two explicit postulates, but also on several tacit assumptions (which are made in almost all theories of physics), including the isotropy and homogeneity of space and the independence of measuring rods and clocks from their past history.[9]

Following Einstein's original presentation of special relativity in 1905, many different sets of postulates have been proposed in various alternative derivations.[10] However, the most common set of postulates remains the one employed by Einstein in his original paper. A more mathematical statement of the Principle of Relativity, made later by Einstein and introducing the concept of simplicity not mentioned above, is:[11]

Special principle of relativity: If a system of coordinates K is chosen so that, in relation to it, physical laws hold good in their simplest form, the same laws hold good in relation to any other system of coordinates K' moving in uniform translation relatively to K.

– Albert Einstein: The foundation of the general theory of relativity, Section A, §1

The two postulates of special relativity imply the applicability to physical laws of the Poincaré group of symmetry transformations, of which the Lorentz transformations are a subset, thereby providing a mathematical framework for special relativity. Many of Einstein's papers present derivations of the Lorentz transformation based upon these two principles.[12]

Einstein consistently based the derivation of Lorentz invariance (the essential core of special relativity) on just the two basic principles of relativity and light-speed invariance. He wrote:

"The insight fundamental for the special theory of relativity is this: The assumptions relativity and light speed invariance are compatible if relations of a new type ("Lorentz transformation") are postulated for the conversion of coordinates and times of events... The universal principle of the special theory of relativity is contained in the postulate: The laws of physics are invariant with respect to Lorentz transformations (for the transition from one inertial system to any other arbitrarily chosen inertial system). This is a restricting principle for natural laws..."[7]

Thus many modern treatments of special relativity base it on the single postulate of universal Lorentz covariance, or, equivalently, on the single postulate of Minkowski spacetime.[13][14]
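A minimal numerical sketch of what Lorentz invariance means in practice follows. It assumes one space dimension, units in which c = 1, the standard boost along x, and the sign convention s² = (ct)² − x² for the interval. The function names and sample events are hypothetical, chosen for illustration.

```python
import math


def boost(t, x, v, c=1.0):
    """Standard Lorentz boost along x with relative velocity v (|v| < c)."""
    gamma = 1.0 / math.sqrt(1.0 - (v / c) ** 2)
    t_prime = gamma * (t - v * x / c ** 2)
    x_prime = gamma * (x - v * t)
    return t_prime, x_prime


def interval_squared(t, x, c=1.0):
    """Spacetime interval from the origin, convention s^2 = (c t)^2 - x^2."""
    return (c * t) ** 2 - x ** 2


v = 0.6                 # relative velocity of the two frames, in units of c
event = (5.0, 3.0)      # hypothetical event (t, x)

t_p, x_p = boost(*event, v)
# The interval takes the same value in both frames.
print(interval_squared(*event), interval_squared(t_p, x_p))

# A light pulse emitted from the origin satisfies x = c t in the first frame.
t_l, x_l = boost(2.0, 2.0, v)
# Its speed x'/t' in the boosted frame is still c.
print(x_l / t_l)
```

The coordinates of the event change under the boost, but the interval and the speed of the light pulse do not, which is the content of both postulates expressed in a single transformation rule.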

Mass-energy equivalence

- See also: Mass in special relativity

In addition to the papers referenced above, which give derivations of the Lorentz transformation and describe the foundations of special relativity, Einstein also wrote at least four papers giving heuristic arguments for the equivalence (and transmutability) of mass and energy (the famous formula E = mc²).

Mass-energy equivalence does not follow from the two basic postulates of special relativity by themselves.[15] The first of Einstein's papers on this subject was Does the Inertia of a Body Depend upon its Energy Content? in 1905.[16] In this first paper and in each of his subsequent three papers on this subject,[17] Einstein augmented the two fundamental principles by postulating the relations involving momentum and energy of electromagnetic waves implied by Maxwell's equations (the assumption of which, of course, entails among other things the assumption of the constancy of the speed of light). It has been suggested that Einstein's original argument was fallacious.[18] Other authors suggest that the argument was merely inconclusive by virtue of some implicit assumptions lacking experimental verification at the time.[19]

Einstein acknowledged in his 1907 survey paper on special relativity that it was problematic to rely on Maxwell's equations for the heuristic mass-energy argument.[20][21]

Lack of an absolute reference frame